Identification of dental implant systems from low-quality and distorted dental radiographs using AI trained on a large multi-center dataset

Ethics statement

All procedures complied with the requirements of the ethics committee and with the principles of the Declaration of Helsinki. This study was approved by the Institutional Review Board of Wonkwang University Daejeon Dental Hospital (approval No. W2104/003-001), and the need for informed consent was waived due to the retrospective nature of the study. This study was conducted in accordance with the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) and AI in dental research guidelines [15,16].

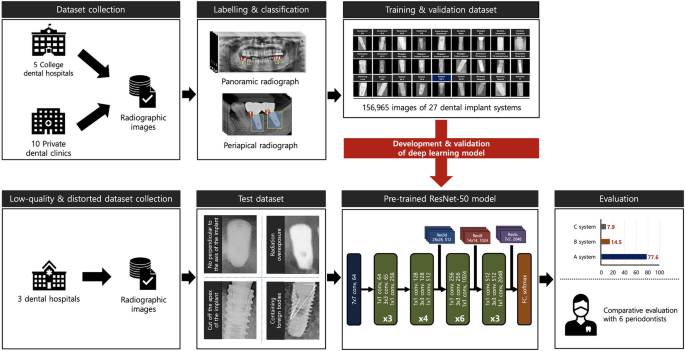

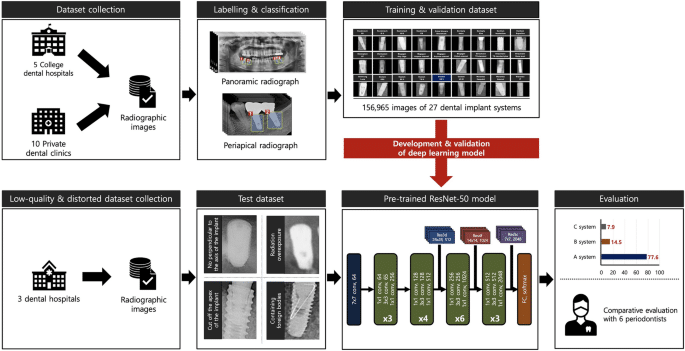

Training and validation datasets

Large-scale, multi-center radiographic images of dental implant systems (DISs), managed and supervised by the National Information Society Agency and the Ministry of Science and Information and Communication Technology, were used to train and validate the deep learning model in this study. The dataset was collected from five college dental hospitals and 10 private dental clinics in 2021 and was released to a limited group of researchers through the AI-HUB platform (www.aihub.or.kr) in 2022. To obtain a dataset optimized for deep learning training, each radiograph in Digital Imaging and Communications in Medicine (DICOM) format was converted to a JPEG file, with a single implant fixture isolated as one region of interest (ROI) image. Subsequently, each ROI was labeled with the manufacturer, brand and system, diameter and length, placement position, date of surgery, and patient age and sex using customized image processing, labeling, and annotation tools. The entire dataset underwent a rigorous quality assurance process in which a board-certified oral and maxillofacial radiologist and a panel of 10 dental experts affiliated with the Korean Academy of Oral and Maxillofacial Implantology validated brightness, contrast, resolution, image quality, and distortion (see Table S1 of the supplemental information).
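The preprocessing described above can be illustrated with a minimal sketch. It covers only two assumed sub-steps: rescaling raw pixel values to the 8-bit range that JPEG requires, and cropping a rectangular ROI around one fixture. The min-max rescaling rule, the function names `rescale_to_uint8` and `crop_roi`, and the bounding-box convention are illustrative assumptions rather than the tools used in the study. Reading DICOM files and encoding JPEG output are omitted because they require external libraries.

```python
def rescale_to_uint8(pixels):
    """Min-max rescale a 2D list of raw intensities to integers in 0..255.

    Assumption: simple min-max scaling; the study does not state its rule.
    """
    flat = [value for row in pixels for value in row]
    lowest, highest = min(flat), max(flat)
    span = highest - lowest
    if span == 0:
        # A constant image carries no contrast; map everything to 0.
        return [[0 for _ in row] for row in pixels]
    return [[round(255 * (value - lowest) / span) for value in row]
            for row in pixels]


def crop_roi(pixels, top, left, height, width):
    """Return the height x width sub-image whose top-left corner is (top, left)."""
    if top < 0 or left < 0 or height <= 0 or width <= 0:
        raise ValueError("ROI must have a non-negative origin and positive size")
    if top + height > len(pixels) or left + width > len(pixels[0]):
        raise ValueError("ROI extends beyond the image")
    return [row[left:left + width] for row in pixels[top:top + height]]


# Toy 3 x 4 image with 12-bit intensities (hypothetical values).
image = [
    [0, 1000, 2000, 4095],
    [4095, 2000, 1000, 0],
    [0, 0, 4095, 4095],
]
scaled = rescale_to_uint8(image)
roi = crop_roi(scaled, top=0, left=1, height=2, width=2)
```

In practice the bounding box would come from the annotation tool, with one box per implant fixture.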

The final training and validation datasets consisted of 156,965 panoramic and periapical radiographs representing 10 manufacturers: Dentium (n = 41,096, 26.2%), Dentsply (n = 15,296, 9.7%), Dioimplant (n = 1,530, 1.0%), Megagen (n = 7,801, 5.0%), Neobiotech (n = 21,260, 13.5%), Nobel Biocare (n = 3,644, 2.3%), Osstem (n = 42,920, 27.3%), Shinhung (n = 3,376, 2.2%), Straumann (n = 4,977, 3.2%), and Warantec (n = 15,065, 9.6%) (Table 1). The dataset was randomly partitioned into training (n = 125,572, 80%) and validation (n = 31,393, 20%) subsets. Although the training dataset is large, the number of images per DIS is uneven and highly imbalanced. Therefore, before the model was evaluated on the test dataset, the training subset was augmented tenfold to reduce this deviation, using the following transformations: random rotations (with a range of 180°), hue adjustments (from −0.2 to 0.2), brightness modulation (from −0.12 to 0.12), contrast changes (from 0.5 to 1.5), zoom changes (from 0.5 to 1.5), noise addition (0.05), and horizontal and vertical flips.
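As a rough illustration of the augmentation settings listed above, the sketch below samples one set of transformation parameters per augmented copy. Several details are assumptions because the text does not specify them: parameters are drawn uniformly, the 180° rotation range is read as −180° to 180°, each flip is applied with probability 0.5, the noise level is fixed at 0.05, and "tenfold" is taken to mean ten augmented copies per image. The names `AugmentationParams`, `sample_augmentation`, and `augmentation_plan` are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class AugmentationParams:
    rotation_deg: float
    hue_shift: float
    brightness_shift: float
    contrast_factor: float
    zoom_factor: float
    noise_level: float
    flip_horizontal: bool
    flip_vertical: bool


def sample_augmentation(rng):
    """Draw one parameter set from the ranges reported in the text."""
    return AugmentationParams(
        rotation_deg=rng.uniform(-180.0, 180.0),   # assumed reading of "range of 180°"
        hue_shift=rng.uniform(-0.2, 0.2),
        brightness_shift=rng.uniform(-0.12, 0.12),
        contrast_factor=rng.uniform(0.5, 1.5),
        zoom_factor=rng.uniform(0.5, 1.5),
        noise_level=0.05,                          # assumed to be a fixed level
        flip_horizontal=rng.random() < 0.5,        # assumed flip probability
        flip_vertical=rng.random() < 0.5,          # assumed flip probability
    )


def augmentation_plan(n_images, copies_per_image=10, seed=0):
    """Return, for each image, a list of parameter sets (one per augmented copy)."""
    rng = random.Random(seed)
    return [[sample_augmentation(rng) for _ in range(copies_per_image)]
            for _ in range(n_images)]


plan = augmentation_plan(n_images=3)
```

Applying the sampled parameters to pixel data would be done with an image library; only the sampling step is shown here.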

Test dataset

The test dataset, which is completely independent of the training and validation datasets, was drawn from the DIS dataset used in our previous multi-center study [17]. The panoramic and periapical radiographic images were collected from three dental hospitals: Daejeon Dental Hospital, Wonkwang University; Ilsan Hospital, National Health Insurance Service; and Mokdong Hospital, Ewha Womans University. Of the DIS images in the raw test dataset, only low-quality and distorted radiographs were included in the final test dataset for this study, according to the following criteria: (1) lack of perpendicular alignment to the implant fixture axis, (2) radiation overexposure, (3) cut-off of the implant fixture apex, and (4) presence of foreign bodies. Consequently, the test dataset used in this study included 586 panoramic and periapical radiographs representing nine different types of DIS: Dentsply Astra OsseoSpeed TX (n = 14, 2.4%), Nobel Biocare Brånemark System MkIII TiUnite (n = 12, 2.0%), Dentium Implantium (n = 116, 19.8%), Shinhung Luns S (n = 8, 1.4%), Straumann SLAactive BL (n = 74, 12.6%), Straumann SLAactive BLT (n = 20, 3.4%), Straumann Standard Plus (n = 8, 1.4%), Dentium Superline (n = 90, 15.4%), and Osstem TSIII (n = 244, 41.6%) (see Table S2 of the supplemental information).
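The class composition reported above can be checked with a short calculation. The counts are copied from the text; the function name `composition` is an illustrative choice. The sketch confirms that the nine classes sum to 586 and reproduces the reported percentages, which makes the imbalance of the test set explicit.

```python
# Per-class image counts of the test dataset, as reported in the text.
TEST_COUNTS = {
    "Dentsply Astra OsseoSpeed TX": 14,
    "Nobel Biocare Brånemark System MkIII TiUnite": 12,
    "Dentium Implantium": 116,
    "Shinhung Luns S": 8,
    "Straumann SLAactive BL": 74,
    "Straumann SLAactive BLT": 20,
    "Straumann Standard Plus": 8,
    "Dentium Superline": 90,
    "Osstem TSIII": 244,
}


def composition(counts):
    """Return the total count and each class share as a percentage (1 decimal)."""
    total = sum(counts.values())
    shares = {name: round(100 * n / total, 1) for name, n in counts.items()}
    return total, shares


total, shares = composition(TEST_COUNTS)
for name, share in shares.items():
    print(f"{name}: n = {TEST_COUNTS[name]}, {share}%")
```

Because a single class accounts for more than 40% of the images, per-class precision and recall are more informative than overall accuracy alone.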

DL algorithm

The ResNet-50 algorithm belongs to the ResNet family of deep convolutional neural network architectures, introduced by He et al. in 2015 [18]. The ResNet model has achieved state-of-the-art results in various computer vision benchmarks and has demonstrated strong performance in previous DIS identification studies using two-dimensional dental radiographs [13,19]. Specifically, the ResNet-50 algorithm consists of 50 layers (one initial convolutional layer, 16 residual blocks of 3 layers each, and one fully connected layer). The skip connections in its residual blocks bypass one or more layers and feed the output of one layer directly into a later layer, effectively allowing the model to learn identity functions. All included images were resized to 224 × 224 pixels and input to a fine-tuned, pre-trained ResNet-50 model. All deep learning processes were performed in MATLAB 2023a (MathWorks, Natick, MA, USA) and Python 3.11 (Python Software Foundation, Wilmington, DE, USA) using the TensorFlow framework. Hyperparameters were optimized systematically using iterative trial-and-error strategies. Specifically, the Adam optimizer was chosen for its adaptive learning rate capabilities. The batch size was set to 32 to balance computational efficiency and training stability. The learning rate was set to 0.001 to ensure gradual convergence without overshooting the minimum of the loss function. Training was performed for a maximum of 100 epochs. To avoid overfitting and to optimize computational resources, we included an early stopping mechanism that terminated the training process if the validation loss did not improve for more than 10 epochs (Fig. 1).
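The early stopping rule described above can be sketched in a framework-independent way. The function below replays a sequence of per-epoch validation losses and stops once the loss has failed to improve for more than `patience` consecutive epochs, or when `max_epochs` is reached. The function name and the literal reading of "more than 10 epochs" are assumptions; library callbacks may count patience slightly differently.

```python
def run_with_early_stopping(val_losses, max_epochs=100, patience=10):
    """Replay validation losses and apply the stopping rule.

    Returns (epochs_run, best_epoch, best_loss). Epochs are numbered from 1.
    Training stops when the loss has not improved for more than `patience`
    consecutive epochs, or after `max_epochs` epochs.
    """
    best_loss = float("inf")
    best_epoch = 0
    stale_epochs = 0
    epochs_run = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        epochs_run = epoch
        if loss < best_loss:
            # New best validation loss: reset the stale counter.
            best_loss, best_epoch, stale_epochs = loss, epoch, 0
        else:
            stale_epochs += 1
            if stale_epochs > patience:
                break
    return epochs_run, best_epoch, best_loss


# Hypothetical loss curve: improves for 3 epochs, then plateaus at a worse value.
plateau = [1.0, 0.8, 0.7] + [0.9] * 50
stopped = run_with_early_stopping(plateau)

# Hypothetical loss curve that keeps improving: runs to the 100-epoch cap.
improving = [1.0 / (i + 1) for i in range(200)]
capped = run_with_early_stopping(improving)
```

With the plateau curve, the best epoch is 3 and training stops at epoch 14, the eleventh consecutive epoch without improvement.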

Self-reported questionnaire

In this study, the DIS classification accuracy of the AI model was compared with that of five periodontists from Jeonbuk National University Dental Hospital. Based on the 586 low-quality and distorted radiographs, a self-report questionnaire was developed by one researcher who was not involved in the study. Pre-classification training and calibration sessions were conducted to improve diagnostic accuracy by aligning the periodontists’ judgement with established standards. Subsequently, standardized radiographs and illustrations of the nine types of DIS were provided prior to the survey, and each periodontist completed the survey individually and independently without further information.

Statistical analysis

Categorical and continuous variables were expressed as frequencies (n), percentages (%), 95% confidence intervals (CIs), range (minimum–maximum), and median values. To assess accuracy on the low-quality and distorted radiographs, the following metrics were used: accuracy was calculated as (true positive [TP] + true negative [TN])/(TP + TN + false positive [FP] + false negative [FN]); precision was defined as TP/(TP + FP); recall was calculated as TP/(TP + FN); and the F1 score was determined by 2 × (precision × recall)/(precision + recall). Furthermore, the areas under the receiver operating characteristic (ROC) curves (AUCs) and the normalized confusion matrix were presented. All deep learning processes were performed in MATLAB 2023a (Deep Network Designer package, MathWorks, Natick, MA, USA) and Python 3.11 (Keras framework in Python, Python Software Foundation, Wilmington, DE, USA) using the TensorFlow framework.
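The metric definitions above can be made concrete with a small worked example. The sketch assumes a one-vs-rest treatment of each class and a confusion matrix whose rows are true classes and whose columns are predicted classes; neither convention is stated in the text. The 3 × 3 matrix is toy data, not a study result, and the function names are illustrative.

```python
def one_vs_rest_counts(cm, k):
    """Return (TP, TN, FP, FN) for class k; rows = true, columns = predicted."""
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                   # class k images predicted as another class
    fp = sum(row[k] for row in cm) - tp    # other classes predicted as class k
    tn = total - tp - fn - fp
    return tp, tn, fp, fn


def safe_div(numerator, denominator):
    """Divide, returning 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def class_metrics(cm, k):
    """Accuracy, precision, recall, and F1 score for class k."""
    tp, tn, fp, fn = one_vs_rest_counts(cm, k)
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
    }


def normalize_rows(cm):
    """Row-normalize so each true class sums to 1 (normalized confusion matrix)."""
    return [[safe_div(value, sum(row)) for value in row] for row in cm]


# Toy confusion matrix for three classes (hypothetical counts).
toy_cm = [
    [8, 2, 0],
    [1, 7, 2],
    [0, 1, 9],
]
metrics_class0 = class_metrics(toy_cm, 0)
normalized = normalize_rows(toy_cm)
```

For class 0 of the toy matrix, TP = 8, FN = 2, FP = 1, and TN = 19, giving an accuracy of 27/30, a precision of 8/9, a recall of 8/10, and an F1 score of 16/19.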